Total Page Preview: 000000006713

Create Azure Data Factory Using Portal

In this article we will create demo for the Azure data factory using azure portal.

For this article I created Azure Storage account for the source and azure SQL database for the destination data. In Azure Storage created blob and upload product.txt file. Below given products data copy and save products.txt file and upload into products (container) in azure blob storage.

ProductId,ProductName,Quantity,City

1,Laptop,10,Noida

2,Mobile,12,Noida

3,Desktop,22,Noida

4,Laptop,23,Delhi

5,Mobile,34,Delhi

6,Desktop,7,Delhi

7,Laptop,87,Noida

8,Mobile,66,Noida

9,Laptop,45,Delhi

Once you done above process then you folow bellow.

Create Data Factory using portal:

Step : 1. Log in to the Azure portal

Step : 2. Click NEW on the left menu, click Data + Analytics, and click Data Factory.

Step : 3. In the New data factory blade, enter SampleAzureDataFactory for the Name.

Note: The name of the Azure data factory must be globally unique. If you receive the error: Data factory name “SampleAzureDataFactory” is not available. Change the name of the data factory (for example, yournameGetStartedDF) and try creating again.

Once you create successfully Azure data factory then you will get below screen:

Step : 4. Click on Azure data factory which you have created. After you click on Author and deploy which is given above screen.

Once you will click on Author and deploy then get below given screen shot:

Step : 5. LinkedService - create Source Azure Storage Linked Service for the connect your blob storage

{

"name": "SourceAzureStorageLinkedService",

"properties": {

"description": "Source Linked Service create for the connect blob storage.",

"type": "AzureStorage",

"typeProperties": {

"connectionString": "DefaultEndpointsProtocol=https;AccountName=ithubcity1;AccountKey=**********"

}

}

}

Step : 6. LinkedService - you Create Destination Azure SQL Database LinkedService for the connect your Azure sQL Database.

{

"name": "DestinationAzureSqlLinkedService",

"properties": {

"description": "Destination Linked Service create for the connect azure sql .",

"type": "AzureSqlDatabase",

"typeProperties": {

"connectionString": "Data Source=tcp:azuresqlserver.database.windows.net,1433;Initial Catalog=db_Products;Integrated Security=False;User ID=IT7266922;Password=**********;Connect Timeout=30;Encrypt=True"

}

}

}

You have created Source and destination LinkedService for the connection string.

Step : 7. Datasets - create source Datasets for the represent you source data structure:

{

"name": "SourceAzureBlobDataset",

"properties": {

"structure": [

{

"name": "ProductId",

"type": "Int64"

},

{

"name": "ProductName",

"type": "String"

},

{

"name": "Quantity",

"type": "Int64"

},

{

"name": "City",

"type": "String"

}

],

"published": false,

"type": "AzureBlob",

"linkedServiceName": "SourceAzureStorageLinkedService",

"typeProperties": {

"fileName": "Products.txt",

"folderPath": "products",

"format": {

"type": "TextFormat",

"columnDelimiter": ",",

"firstRowAsHeader": true

}

},

"availability": {

"frequency": "Minute",

"interval": 15

},

"external": true,

"policy": {}

}

}

Step : 8. Create table into your azure sql database which you have created for the destination

CREATE TABLE [dbo].[tblProducts]

(

[ProductId] [int] NULL,

[ProductName] [varchar](50) NULL,

[Quantity] [int] NULL,

[City] [nvarchar](50) NULL

)

Step : 9. Datasets - create Destination Datasets for the represent your destination data structure which you created in azure sql table:

{

"name": "DestinationAzureSQLDataset",

"properties": {

"structure": [

{

"name": "ProductId",

"type": "Int64"

},

{

"name": "ProductName",

"type": "String"

},

{

"name": "Quantity",

"type": "Int64"

},

{

"name": "City",

"type": "String"

}

],

"published": false,

"type": "AzureSqlTable",

"linkedServiceName": "DestinationAzureSqlLinkedService",

"typeProperties": {

"tableName": "[dbo].[tblProducts]"

},

"availability": {

"frequency": "Minute",

"interval": 15

},

"external": true,

"policy": {}

}

}

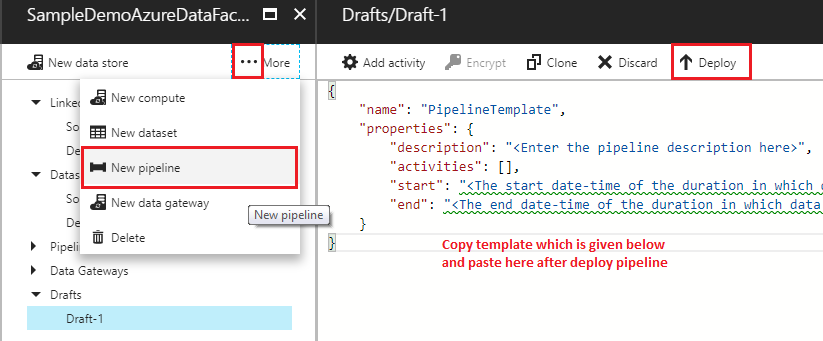

Step : 10. Pipeline - Create pipeline is a group of activities. Together, the activities in a pipeline perform a task.

{

"name": "PipelineTemplate",

"properties": {

"description": "pipe line create for the make activity",

"activities": [

{

"type": "Copy",

"typeProperties": {

"source": {

"type": "BlobSource",

"recursive": false

},

"sink": {

"type": "SqlSink",

"writeBatchSize": 0,

"writeBatchTimeout": "00:00:00"

},

"translator": {

"type": "TabularTranslator",

"columnMappings": "ProductId:ProductId,ProductName:ProductName,Quantity:Quantity,City:City"

}

},

"inputs": [

{

"name": "SourceAzureBlobDataset"

}

],

"outputs": [

{

"name": "DestinationAzureSQLDataset"

}

],

"policy": {

"timeout": "1.00:00:00",

"concurrency": 1,

"executionPriorityOrder": "NewestFirst",

"style": "StartOfInterval",

"retry": 1,

"longRetry": 0,

"longRetryInterval": "00:00:00"

},

"scheduler": {

"frequency": "Minute",

"interval": 15

},

"name": "Activity-0-Products_txt->[dbo]_[tblProducts]"

}

],

"start": "2017-07-20T10:10:43.355Z",

"end": "2099-12-30T18:30:00Z",

"isPaused": false,

"pipelineMode": "Scheduled"

}

}

Now you have created successfully azure data factory.

Go your Azure sql for the OUTPUT:

Thank You

About Author

Brijesh Kumar

Database Developer

I have more then 6 years Experience in Microsoft Technologies - SQL Server Database, ETL

Azure Cloud - Azure SQL Database, CosmosDB, Azure Data Factory, PowerBI, Web Job, Azure Function, Azure Storage, Web Apps, Powershall

and Database Migration On-Premise to Azure Cloud.

LinkedIn : https://www.linkedin.com